In a previous lesson we learned how to scrape the content of websites. In this lesson I will show how to scrape JavaScript-based websites using a powerful Symfony package, “symfony/panther”.

Requirements

- Laravel 8

- symfony/panther

- Laravel Livewire

- Tailwind CSS

If you want to go back to my previous lesson about scraping, just click this link. The problem we faced in that lesson is that some websites are difficult to scrape with traditional techniques: the JavaScript-rendered sites. These include Single Page Apps, such as websites built with React or Vue.js. Because their content is generated in the browser after the page loads, fetching the raw HTML is not enough, so we need a different technique. Tools such as PhantomJS and Google Puppeteer already provide it, but they require some experience with Node.js.

Fortunately, in PHP there is a Symfony package for this: symfony/panther. It works in a similar way to PhantomJS and Puppeteer. I will demonstrate how to use it below, but first let’s illustrate how Panther works.

Panther works by starting a browser driver (such as chromedriver) as a background process listening on a specific port, and controlling a real browser, headless by default, through that connection. Once the page is loaded, we can use normal CSS selectors or XPath (XML Path Language) expressions to select and query the elements we want to scrape.
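To make the selector idea concrete, and to show why a browser is needed at all, here is a minimal sketch in plain PHP using the built-in DOMDocument and DOMXPath classes. It is not Panther and runs no JavaScript, and both HTML strings are hypothetical: the first mimics the nearly empty page a plain HTTP request typically gets from a Single Page App, the second the DOM after the app's JavaScript has rendered the products. The same XPath query finds nothing in the first and both titles in the second.

```php
<?php
// Hypothetical markup: what a plain HTTP request to an SPA typically returns...
$shellHtml = '<html><body><div id="app"></div><script src="/app.js"></script></body></html>';
// ...and what the DOM looks like after the app's JavaScript has run.
$renderedHtml = '<html><body><div id="app">'
    . '<div class="product-card"><span class="title">Sprite 425 mL</span></div>'
    . '<div class="product-card"><span class="title">Fanta 390 mL</span></div>'
    . '</div></body></html>';

/** Return the titles of all product cards found with an XPath query. */
function productTitles(string $html): array
{
    $doc = new DOMDocument();
    $doc->loadHTML($html);
    $xpath = new DOMXPath($doc);

    // XPath equivalent of the CSS selector "#app .product-card .title"
    $query = '//*[@id="app"]//*[contains(concat(" ", normalize-space(@class), " "), " product-card ")]'
        . '//*[contains(concat(" ", normalize-space(@class), " "), " title ")]';

    $titles = [];
    foreach ($xpath->query($query) as $node) {
        $titles[] = trim($node->textContent);
    }
    return $titles;
}

var_dump(productTitles($shellHtml));    // empty array: nothing to scrape yet
var_dump(productTitles($renderedHtml)); // the two product titles
```

This is exactly the gap Panther fills: it lets the real browser run the JavaScript first, so your selectors run against the rendered DOM.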

I will use Laravel 8 for this example, so make sure you have PHP 8 installed on your machine. In this example we will scrape a products page of an e-commerce website.

So create a new Laravel project using Composer:

composer create-project laravel/laravel panther_scrape

Also install Livewire:

composer require livewire/livewire

Laravel Livewire is a powerful framework for building modern, dynamic interfaces, similar in spirit to Vue.js but written with Laravel components. To learn more about Livewire, head to the Livewire docs.

You can publish the Livewire config file using:

php artisan livewire:publish --config

Once this is done, you will find the config/livewire.php file. The most important setting in this file is asset_url; set it to read from APP_URL like so:

'asset_url' => env('APP_URL'),

This retrieves the value from the .env file. Sometimes you need to set APP_URL to the full project URL, for example when the project is served from a subdirectory:

APP_URL=http://localhost/panther_scrape/public

Next, run this command to install the npm dependencies:

npm install

After this command completes, let’s install Tailwind CSS. Tailwind CSS is a CSS framework, but unlike libraries such as Bootstrap, it gives you a set of utility classes that let you build your own components with ease. Of course, you can use Bootstrap or Materialize instead.

Install Tailwind CSS:

npm install -D tailwindcss@latest postcss@latest autoprefixer@latest

Then create the Tailwind config file using:

npx tailwindcss init

This creates tailwind.config.js in the root directory of your project. This file contains the various configuration options for Tailwind CSS. The next step is to configure Tailwind with Laravel Mix.

Include Tailwind CSS in webpack.mix.js like so:

mix.js('resources/js/app.js', 'public/js')
    .postCss('resources/css/app.css', 'public/css', [
        require("tailwindcss"),
    ]);

Then open resources/css/app.css and include Tailwind CSS:

@tailwind base;
@tailwind components;
@tailwind utilities;

Finally, run npm run dev to generate the app.css file in the public/ directory. In the next section we will prepare the Livewire components and pages.

Preparing Livewire components

For the purpose of our example we will create two Livewire components that represent two pages: one is the scraper form and the other displays the scraped products.

Run these commands in your project root:

php artisan make:livewire ScraperForm
php artisan make:livewire Products

Once you run these commands, some files will be created in app/Http/Livewire and resources/views/livewire. Each Livewire component has two files: a PHP class located in app/Http/Livewire and a view file located in resources/views/livewire.

If you have worked with Vue.js before, you will find it is the same concept, but the advantage here is that it is written in PHP, not JavaScript. The component class contains the state and actions, plus a render() method that returns the component view.

For example, here is the render() method of the ScraperForm component:

public function render()
{
    return view('livewire.scraper-form');
}

Now update the routes in routes/web.php:

<?php

use App\Http\Livewire\Products;
use App\Http\Livewire\ScraperForm;
use Illuminate\Support\Facades\Route;

Route::get('/', Products::class);
Route::get('/scrape', ScraperForm::class);

As you can see, it’s possible to use an entire Livewire component as a route, just like a controller. When the user navigates to the base URL “/” they see the products page, and when they navigate to “/scrape” they see the scraper form. For this to work, let’s create the app layout and update each component view.

Preparing the main layout and components

Create the view resources/views/layouts/app.blade.php (by default, Livewire full-page components are rendered inside the layouts.app layout):

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Scraper</title>
    <link href="{{ asset('css/app.css') }}" rel="stylesheet" />
    @livewireStyles
</head>
<body>
    <div class="container mx-auto">
        <header class="bg-gray-400 flex justify-start pt-4 pb-4 space-x-4 shadow mb-4">
            <a href="{{ url('/') }}" class="ml-4 bg-red-700 p-2 text-white rounded hover:bg-black">All Products</a>
            <a href="{{ url('/scrape') }}" class="bg-green-800 p-2 text-white rounded hover:bg-black">Scrape</a>
        </header>

        {{ $slot }}
    </div>

    @livewireScripts
    <script type="text/javascript" src="{{ asset('js/app.js') }}"></script>
    @stack('scripts')
</body>
</html>

This is the main layout. I added the Livewire styles and scripts with the special directives @livewireStyles and @livewireScripts, which Livewire needs in order to render the components. I also included the app.css and app.js files generated by npm run dev, and added two links, styled with Tailwind CSS, to navigate between the two pages. The special $slot variable is where Laravel renders the component's content; it is used instead of @yield. The @stack('scripts') directive lets component views push their own scripts into the layout.

In addition, update the component views below:

resources/views/livewire/scraper-form.blade.php

<div>
    <h2 class="text-3xl font-bold">Enter url to scrape</h2>

    <form method="post" class="mt-4" wire:submit.prevent="scrape">
        <div class="flex flex-col mb-4">
            <label class="mb-2 uppercase font-bold text-lg text-grey-darkest">Url</label>
            <input type="text" class="border border-gray-400 py-2 px-3 text-grey-darkest placeholder-gray-500" name="url" id="url" placeholder="Url..." />
            <button type="submit" class="block bg-red-400 hover:bg-teal-dark text-lg mx-auto p-4 rounded mt-3">Add to queue</button>
        </div>
    </form>
</div>

resources/views/livewire/products.blade.php

<div>
    @foreach($products as $product)
        <div class="max-w-md mx-auto bg-white rounded-xl shadow-md overflow-hidden md:max-w-2xl">
            <div class="md:flex">
                @if($product['image_url'])
                    <div class="md:flex-shrink-0">
                        <img class="h-48 w-full object-cover md:w-48" src="{{ $product['image_url'] }}">
                    </div>
                @endif
                <div class="p-8">
                    <div class="uppercase tracking-wide text-sm text-indigo-500 font-semibold">{{ $product['price'] }}</div>
                    <a href="{{ $product['product_url'] }}" target="_blank" class="block mt-1 text-lg leading-tight font-medium text-black hover:underline">{{ $product['title'] }}</a>
                </div>
            </div>
        </div>
    @endforeach
</div>

In the scraper-form view I added a simple form so the user can enter the URL to scrape. Notice the attribute wire:submit.prevent="scrape"; it works like v-on in Vue.js. Livewire actions and bindings start with “wire:”, such as wire:submit, wire:click and wire:model, so when the user submits the form it triggers the scrape action, which we will define as a method in the component class below. The .prevent modifier stops the default form submission, so the page does not reload.

In the products view we iterate over the $products variable, which we will declare below, and display each product.

Next, update app/Http/Livewire/Products.php:

<?php

namespace App\Http\Livewire;

use Livewire\Component;

class Products extends Component
{
    public $products = [];

    public function mount()
    {
        $this->products = [
            [
                "id" => 1,
                "title" => "Sprite Waterlymon 425 mL",
                "price" => "5.100",
                "product_url" => "https://shopee.co.id/Sprite-Waterlymon-425-mL-i.127192295.4501804525",
                "image_url" => "https://cf.shopee.co.id/file/feeab4341caa2c43b104a995709df777_tn"
            ],
            [
                "id" => 2,
                "title" => "Sprite Waterlymon 425 mL",
                "price" => "5.100",
                "product_url" => "https://shopee.co.id/Sprite-Waterlymon-425-mL-i.127192295.4501804525",
                "image_url" => "https://cf.shopee.co.id/file/feeab4341caa2c43b104a995709df777_tn"
            ],
            [
                "id" => 3,
                "title" => "Sprite Waterlymon 425 mL",
                "price" => "5.100",
                "product_url" => "https://shopee.co.id/Sprite-Waterlymon-425-mL-i.127192295.4501804525",
                "image_url" => "https://cf.shopee.co.id/file/feeab4341caa2c43b104a995709df777_tn"
            ]
        ];
    }

    public function render()
    {
        return view('livewire.products');
    }
}

Update app/Http/Livewire/ScraperForm.php:

<?php

namespace App\Http\Livewire;

use Livewire\Component;

class ScraperForm extends Component
{
    public function scrape()
    {
        // Temporary placeholder to confirm that submitting the form triggers this action
        dd("start fetching");
    }

    public function render()
    {
        return view('livewire.scraper-form');
    }
}

As you can see, in the Products class I declared the public $products property used in the view above, and in the mount() hook I initialized it with some dummy products. mount() is a special Livewire hook that runs once when the component is initialized, before rendering; it is similar to the Vue.js mounted() hook.

In the ScraperForm class I added the public scrape() method, which acts as the action: as shown above, submitting the form triggers it. Livewire maps the public properties and methods of the class so that they can be used from the browser through JavaScript.

Now we will install symfony/panther and prepare the database in the next section.

Installing Panther

Run this command in the terminal:

composer req --dev symfony/panther

You may encounter errors while installing Panther in Laravel, because it is not a Laravel package. To resolve this, temporarily remove this line from the post-autoload-dump scripts in composer.json:

"@php artisan package:discover --ansi"

and add it back after the installation.

Installing Drivers

The next step is to install the browser drivers that Panther uses to control a browser. Panther speaks the WebDriver protocol and relies on ChromeDriver for Chrome and geckodriver for Firefox, so you must have at least one of these drivers installed on your machine, along with the matching browser.

There are two ways to install the drivers:

- The first method is through Composer:

composer require --dev dbrekelmans/bdi

Then let bdi detect the browsers installed on your machine and download the matching drivers:

vendor/bin/bdi detect drivers

- The second method is to install them system-wide and make sure they are available on your PATH.

On Ubuntu, run:

apt-get install chromium-chromedriver firefox-geckodriver

On Mac, using Homebrew:

brew install chromedriver geckodriver

On Windows, using chocolatey:

choco install chromedriver selenium-gecko-driver

In my case I chose the first method and installed the drivers through Composer. Once they are installed, you will find a drivers/ directory in the root of your project containing the chromedriver and geckodriver binaries.

Basic usage:

use Symfony\Component\Panther\Client;

$url = "https://shopee.co.id/shop/127192295/search";

// Create a Chrome client using the chromedriver in drivers/, on a custom port
$client = Client::createChromeClient(base_path("drivers/chromedriver"), null, ["port" => 9558]);

$client->request('GET', $url);

// Wait until an element with this CSS class appears in the DOM, then use the refreshed crawler
$crawler = $client->waitFor('.shopee-page-controller');

echo $crawler->filter('.shopee-page-controller')->text();

// Shut down the browser and chromedriver
$client->quit();

The code above creates a Chrome client, passing the path of the chromedriver binary located in the drivers/ directory; if you pass null instead, Panther tries to locate chromedriver by itself. I also passed an array as the third argument with a custom port number. Next, $client->request() loads the page. The most important line is $client->waitFor(), which accepts a CSS selector and waits until a matching element appears in the DOM (30 seconds by default), then returns a crawler for the updated page. Finally, I retrieved the element's inner text using $crawler->filter()->text().
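Conceptually, waiting for an element is a polling loop: check a condition, sleep for a short interval, and give up after a timeout. The sketch below illustrates that pattern in plain PHP with a hypothetical waitUntil() helper and a fake condition that only succeeds on the third check, standing in for content that JavaScript renders later. It is not Panther's code; Panther builds on the php-webdriver library for the actual waiting.

```php
<?php
/**
 * Hypothetical helper: call $condition every $intervalMs milliseconds until it
 * returns a non-null value, or throw once $timeoutSec seconds have passed.
 */
function waitUntil(callable $condition, float $timeoutSec = 30.0, int $intervalMs = 250)
{
    $deadline = microtime(true) + $timeoutSec;
    while (true) {
        $result = $condition();
        if ($result !== null) {
            return $result; // condition met
        }
        if (microtime(true) >= $deadline) {
            throw new RuntimeException("Timed out after {$timeoutSec}s");
        }
        usleep($intervalMs * 1000); // wait before polling again
    }
}

// Fake "page": the element only shows up on the third check.
$checks = 0;
$found = waitUntil(function () use (&$checks) {
    $checks++;
    return $checks >= 3 ? '<div class="shopee-page-controller">' : null;
}, 2.0, 10);

echo "Found after {$checks} checks: {$found}\n";
```

This is also why the timeout matters when scraping: if the selector never matches (for example because the site changed its markup), the wait ends with an error instead of hanging forever.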

Note that symfony/panther builds on the Symfony DomCrawler component; you can learn more by clicking this link.

Let’s take a further step and set up the database table that will hold the scraped data. Create a new MySQL database (for example with phpMyAdmin) and update the database settings in your project's .env file like so:

DB_CONNECTION=mysql
DB_HOST=127.0.0.1
DB_PORT=3306
DB_DATABASE=panther_scrape
DB_USERNAME=<db username>
DB_PASSWORD=<db password>

You might need to run these commands to clear the cached config:

php artisan config:clear
php artisan config:cache
php artisan cache:clear

Create a new Laravel migration:

php artisan make:migration create_products_table

Next, open the created migration file and update the up() method like so:

Schema::create('products', function (Blueprint $table) {
    $table->id();
    $table->string('title');
    $table->string('price')->nullable();
    $table->string('product_url');
    $table->string('image_url')->nullable();
    $table->timestamps();
});

Then migrate the tables using:

php artisan migrate

After a successful migration you will find the tables created in the database. Next, create the Product model:

php artisan make:model Product

We will scrape the page “https://shopee.co.id/shop/127192295/search” as an example, but first let’s list what we need to make symfony/panther work in this setup:

- A console command, so that symfony/panther runs in CLI mode.

- A queue, so that when a scrape is requested from the browser, the job is saved to the database and processed later.

- A database table to hold the queued jobs.

- A background service that monitors the queue and processes the jobs. On Linux I will use Supervisor; on Windows you can check this article.

First, let’s create a console command containing the code that processes the URL with symfony/panther.

In the terminal, run:

php artisan make:command CrawlUrlCommand

This generates a new class, CrawlUrlCommand.php, in app/Console/Commands.

Open CrawlUrlCommand.php and update it with this code:

<?php

namespace App\Console\Commands;

use App\Models\Product;
use Illuminate\Console\Command;
use Symfony\Component\Panther\Client;
use Symfony\Component\Panther\DomCrawler\Crawler;

class CrawlUrlCommand extends Command
{
    /**
     * The name and signature of the console command.
     *
     * @var string
     */
    protected $signature = 'Url:Crawl {url}';

    /**
     * The console command description.
     *
     * @var string
     */
    protected $description = 'Crawl url using panther';

    /**
     * Execute the console command.
     *
     * @return int
     */
    public function handle()
    {
        $url = $this->argument('url');

        $_SERVER['PANTHER_NO_HEADLESS'] = false; // keep Chrome headless
        $_SERVER['PANTHER_NO_SANDBOX'] = true;   // start Chrome with --no-sandbox (required when running as root)

        $client = null;

        try {
            $client = Client::createChromeClient(base_path("drivers/chromedriver"), null, ["port" => 9558]);

            $this->info("Start processing");

            $client->request('GET', $url);

            // Wait until the JavaScript-rendered page controller exists, then use the refreshed crawler
            $crawler = $client->waitFor('.shopee-page-controller');

            // Each matched element is one product card
            $crawler->filter('#main > div > div._193wCc > div.shop-search-page.container > div > div.shop-search-page__right-section > div > div.shop-search-result-view > div > div.col-xs-2-4')->each(function (Crawler $parentCrawler, $i) {
                // Specify the full parent path in each selector too
                $titleCrawler = $parentCrawler->filter('#main > div > div._193wCc > div.shop-search-page.container > div > div.shop-search-page__right-section > div > div.shop-search-result-view > div > div.col-xs-2-4 div._36CEnF');
                $priceCrawler = $parentCrawler->filter('#main > div > div._193wCc > div.shop-search-page.container > div > div.shop-search-page__right-section > div > div.shop-search-result-view > div > div.col-xs-2-4 ._29R_un');
                $imageCrawler = $parentCrawler->filter('#main > div > div._193wCc > div.shop-search-page.container > div > div.shop-search-page__right-section > div > div.shop-search-result-view > div > div.col-xs-2-4 img.mxM4vG');
                $urlCrawler = $parentCrawler->filter('#main > div > div._193wCc > div.shop-search-page.container > div > div.shop-search-page__right-section > div > div.shop-search-result-view > div > div.col-xs-2-4 a[data-sqe="link"]');

                // getText()/getAttribute() fail on an empty crawler, so check count() first
                $product = new Product();
                $product->title = $titleCrawler->count() ? $titleCrawler->getText() : "";
                $product->price = $priceCrawler->count() ? $priceCrawler->getText() : "";
                $product->product_url = $urlCrawler->count() ? ($urlCrawler->getAttribute("href") ?? "") : "";
                $product->image_url = $imageCrawler->count() ? ($imageCrawler->getAttribute("src") ?? "") : "";
                $product->save();

                $this->info("Item retrieved and saved");
            });

            return self::SUCCESS;
        } catch (\Exception $ex) {
            // Report and fail instead of dd(), which would terminate the process (including a queue worker)
            $this->error("Error: " . $ex->getMessage());

            return self::FAILURE;
        } finally {
            $this->info("Finished processing");

            // Always shut down the browser and chromedriver, even after an error
            if ($client !== null) {
                $client->quit();
            }
        }
    }
}

I changed the command signature to “Url:Crawl {url}” so that, when calling it from the command line, you pass the URL of the page you want to crawl. The handle() method contains the code that initializes the Panther client and fetches the products.

Inside the try/catch block I create a Chrome client using Client::createChromeClient(), as shown above. Then $crawler->filter(selector) returns the list of product elements, and I chain ->each(), which accepts a callback. Inside the callback, $parentCrawler represents a single product, and I call $parentCrawler->filter() again to get its title, price, and so on.

For each item I then create a new Product model and use the getText() and getAttribute() methods of Panther's crawler to read the inner text and attribute values. For example, the title comes from $titleCrawler->getText(). Finally, I save the product.
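When a selector matches nothing, reading text from it fails, which is the usual cause of “node not found” style errors once the site changes its markup. The guard-before-read idea can be tried without a browser: below is a minimal sketch using PHP's built-in DOMXPath and made-up markup in which the second product has no price. The hypothetical textOrDefault() helper returns a default instead of failing. With Panther you would apply the same idea by checking count() on the filtered crawler before calling getText().

```php
<?php
/**
 * Hypothetical helper: return the trimmed text of the first node matched by an
 * XPath expression (evaluated relative to $context), or $default if nothing matches.
 */
function textOrDefault(DOMXPath $xpath, string $expr, ?DOMNode $context = null, string $default = ''): string
{
    $nodes = $xpath->query($expr, $context);
    if ($nodes === false || $nodes->length === 0) {
        return $default; // invalid expression or no match
    }
    return trim($nodes->item(0)->textContent);
}

// Made-up markup: the second card has no price element.
$doc = new DOMDocument();
$doc->loadHTML('<div class="card"><span class="title">Sprite</span><span class="price">5.100</span></div>'
    . '<div class="card"><span class="title">Fanta</span></div>');
$xpath = new DOMXPath($doc);

$rows = [];
foreach ($xpath->query('//div[@class="card"]') as $card) {
    $rows[] = [
        // The leading "." keeps each query inside the current card
        'title' => textOrDefault($xpath, './/span[@class="title"]', $card),
        'price' => textOrDefault($xpath, './/span[@class="price"]', $card, 'n/a'),
    ];
}

print_r($rows);
```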

It’s important to call $client->quit() to shut down the browser when processing finishes; calling it in the finally {} block ensures this happens even when an error occurs.

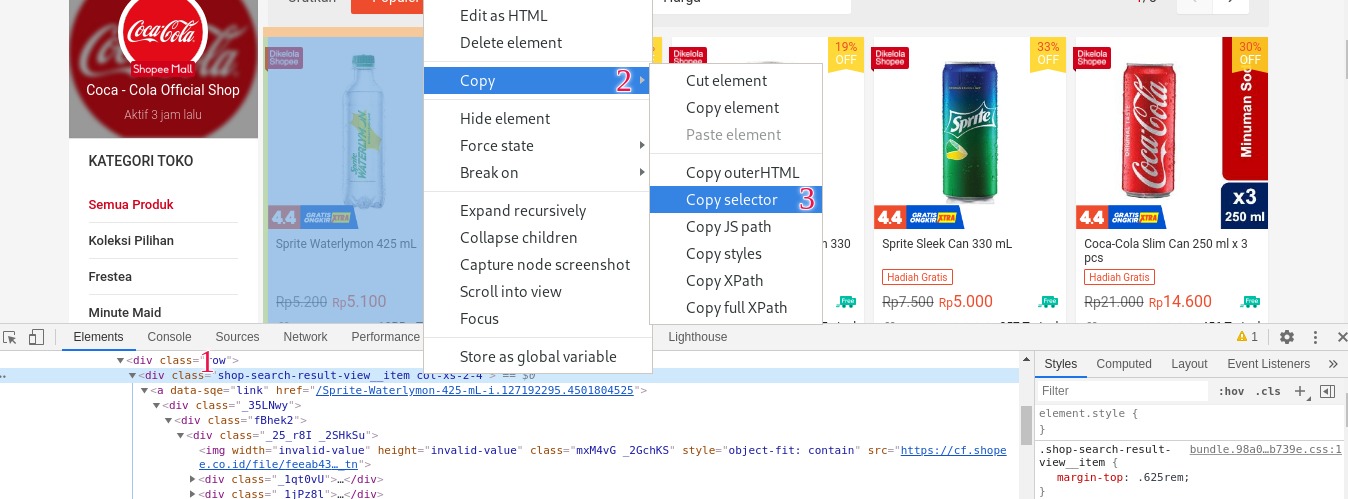

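You may be wondering how I constructed this long selector. You can build it yourself with the browser devtools, for example in Chrome DevTools by right-clicking the element in the Elements panel and choosing Copy > Copy selector, as in the screenshot above. Keep in mind that class names such as _193wCc look auto-generated by the site's build, so they are likely to change and break the selectors.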

Now give this command a try:

php artisan Url:Crawl https://shopee.co.id/shop/127192295/search

If the command runs successfully, you will see the products stored in the database.

We need this command to run when we click the button in the browser. However, we cannot run it directly inside a web request, because launching a browser and scraping a page takes too long, so we will queue it and let a background service execute it. Let’s use Laravel queues for that.

Open .env and update QUEUE_CONNECTION

QUEUE_CONNECTION=database

Next, run these two commands to create and run the jobs table migration:

php artisan queue:table
php artisan migrate

This creates the jobs table; Laravel 8 already ships with a migration for the failed_jobs table.

Updating ScraperForm component

app/Http/Livewire/ScraperForm.php

<?php

namespace App\Http\Livewire;

use Illuminate\Support\Facades\Artisan;
use Livewire\Component;

class ScraperForm extends Component
{
    public $url;

    public $startScrape = false;

    protected $rules = [
        'url' => 'required|url'
    ];

    public function scrape()
    {
        $this->validate();

        try {
            // Queue the crawl command on the "processing" queue of the database connection
            Artisan::queue("Url:Crawl", ["url" => $this->url])
                ->onConnection('database')->onQueue('processing');

            $this->url = "";
            $this->startScrape = true;

            session()->flash("success", "Url added to the queue successfully; it will be processed shortly!");
        } catch (\Exception $ex) {
            // Show the error under the url field instead of dumping it
            $this->addError('url', $ex->getMessage());
        }
    }

    // Validate each field as soon as it changes
    public function updated($propertyName)
    {
        $this->validateOnly($propertyName);
    }

    public function render()
    {
        return view('livewire.scraper-form');
    }
}

resources/views/livewire/scraper-form.blade.php

<div>
    <h2 class="text-3xl font-bold">Enter url to scrape</h2>

    <form method="post" class="mt-4" wire:submit.prevent="scrape">
        @if(session()->has("success"))
            <div class="bg-green-600 text-white rounded p-4 flex justify-start items-center">
                <div>
                    <svg class="w-10" xmlns="http://www.w3.org/2000/svg" fill="none" viewBox="0 0 24 24" stroke="currentColor">
                        <path stroke-linecap="round" stroke-linejoin="round" stroke-width="2" d="M9 12l2 2 4-4m6 2a9 9 0 11-18 0 9 9 0 0118 0z" />
                    </svg>
                </div>
                <span>
                    {{ session("success") }}
                </span>
            </div>
        @endif

        <div class="flex flex-col mb-4">
            <label class="mb-2 uppercase font-bold text-lg text-grey-darkest">Url</label>
            <input type="text" wire:model.debounce.500ms="url" class="border border-gray-400 py-2 px-3 text-grey-darkest placeholder-gray-500" name="url" id="url" placeholder="Url..." />
            @error('url') <span class="text-red-700">{{ $message }}</span> @enderror
            <button type="submit" wire:loading.attr="disabled" class="block bg-red-400 hover:bg-teal-dark text-lg mx-auto p-4 rounded mt-3">Add to queue</button>
        </div>
    </form>
</div>

Now, if you submit the form and inspect the jobs table, you will find a new job saved with its payload and queue name. The next step is to set up Supervisor on Linux to run the queued jobs; if you are on Windows, look up how to run the queue worker as a background service.

Supervisor Setup

Ubuntu

apt-get install supervisor

CentOS & Fedora

sudo yum update -y
sudo yum install epel-release
sudo yum update
sudo yum -y install supervisor
sudo systemctl start supervisord
sudo systemctl enable supervisord
sudo systemctl status supervisord

Next, create a Supervisor config file for the queue worker. On CentOS and Fedora, Supervisor config files are typically located in /etc/supervisord.d (on Ubuntu the equivalent is /etc/supervisor/conf.d, using .conf files).

Create a new file /etc/supervisord.d/laravel-worker.ini:

[program:laravel-worker]
process_name=%(program_name)s_%(process_num)02d
command=php /var/www/html/panther_scrape/artisan queue:work database --queue=processing --tries=3 --max-time=3600
autostart=true
autorestart=true
stopasgroup=true
killasgroup=true
; a single worker: CrawlUrlCommand always uses port 9558, so parallel workers would collide
numprocs=1
user=root
redirect_stderr=true
stdout_logfile=/var/www/html/panther_scrape/worker.log
stopwaitsecs=3600

Then open /etc/supervisord.conf and make sure these lines are at the end of the file:

[include]
files = supervisord.d/*.ini

After updating the file, reload Supervisor:

sudo supervisorctl reload

Once reloaded, Supervisor starts the Laravel queue worker, and you can follow its output in worker.log in the root of your project. Check the products table in the database; once products have been added, let’s display them.

Displaying Products

app/Http/Livewire/Products.php

<?php

namespace App\Http\Livewire;

use App\Models\Product;
use Livewire\Component;
use Livewire\WithPagination;

class Products extends Component
{
    use WithPagination;

    public function render()
    {
        return view('livewire.products', [
            'products' => Product::orderBy("id", "DESC")->paginate(10)
        ]);
    }
}

resources/views/livewire/products.blade.php

<div>
    @foreach($products as $product)
        <div class="max-w-md mx-auto bg-white rounded-xl shadow-md overflow-hidden md:max-w-2xl">
            <div class="md:flex">
                @if(!empty($product['image_url']))
                    <div class="md:flex-shrink-0">
                        <img class="h-48 w-full object-cover md:w-48" src="{{ $product['image_url'] }}" />
                    </div>
                @endif
                <div class="p-8">
                    <div class="uppercase tracking-wide text-sm text-indigo-500 font-semibold">{{ $product['price'] }}</div>
                    <a href="{{ str_replace("%7D", "", $product['product_url']) }}" target="_blank" class="block mt-1 text-lg leading-tight font-medium text-black hover:underline">{{ $product['title'] }}</a>
                </div>
            </div>
        </div>
    @endforeach

    {{ $products->links() }}
</div>
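If the scraped product_url values turn out to be relative paths (for example starting with /), the links above would point at your own application instead of the shop. Whether the site returns relative hrefs is an assumption you should check in your products table. Here is a minimal sketch of a hypothetical toAbsoluteUrl() helper in plain PHP that you could apply to the href before saving it; it handles only the common cases and is not part of the original code.

```php
<?php
/**
 * Hypothetical helper: turn a scraped href into an absolute URL using the scheme
 * and host of the page it was scraped from. Covers absolute, protocol-relative and
 * root-relative links only; it is not a full RFC 3986 resolver.
 */
function toAbsoluteUrl(string $href, string $pageUrl): string
{
    $href = trim($href);
    if ($href === '' || parse_url($href, PHP_URL_SCHEME) !== null) {
        return $href; // empty, or already has a scheme (https://...)
    }

    $scheme = parse_url($pageUrl, PHP_URL_SCHEME) ?? 'https';
    $host = parse_url($pageUrl, PHP_URL_HOST) ?? '';

    if (str_starts_with($href, '//')) {
        return $scheme . ':' . $href; // protocol-relative: reuse the page's scheme
    }

    // Root-relative ("/path"); bare relative paths are also attached to the site root (a simplification)
    return $scheme . '://' . $host . '/' . ltrim($href, '/');
}

echo toAbsoluteUrl('/Sprite-Waterlymon-425-mL-i.127192295.4501804525', 'https://shopee.co.id/shop/127192295/search'), "\n";
```

In CrawlUrlCommand this could be called as toAbsoluteUrl($href, $url), where $url is the page being crawled. str_starts_with() requires PHP 8.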

Optional: Event Notification When Product Inserted

This is an optional feature. If you want to make the scraper form more interactive, you can use Laravel events to broadcast an event each time a product is inserted. I will use Pusher for this, so first go to pusher.com, sign in, and create a new app.

Open .env and make these updates:

BROADCAST_DRIVER=pusher
PUSHER_APP_ID=<pusher id>
PUSHER_APP_KEY=<pusher key>
PUSHER_APP_SECRET=<pusher secret>
PUSHER_APP_CLUSTER=eu
MIX_PUSHER_APP_KEY="${PUSHER_APP_KEY}"
MIX_PUSHER_APP_CLUSTER="${PUSHER_APP_CLUSTER}"

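Install the Pusher PHP SDK, which Laravel uses to send the events, and the Pusher JavaScript SDK:

composer require pusher/pusher-php-server

npm install pusher-js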

Also make sure laravel-echo is installed; if it is not, install it with:

npm install laravel-echo

Include Laravel Echo in resources/js/bootstrap.js:

import Echo from 'laravel-echo';

window.Pusher = require('pusher-js');
window.Pusher.logToConsole = true;

window.Echo = new Echo({
    broadcaster: 'pusher',
    key: process.env.MIX_PUSHER_APP_KEY,
    cluster: process.env.MIX_PUSHER_APP_CLUSTER,
    forceTLS: true
});

Then run npm run dev to regenerate app.js.

Broadcasting requires an event class, so create a new Laravel event:

php artisan make:event ProductReceived

app/Events/ProductReceived.php

<?php

namespace App\Events;

use App\Models\Product;
use Illuminate\Broadcasting\Channel;
use Illuminate\Broadcasting\InteractsWithSockets;
use Illuminate\Contracts\Broadcasting\ShouldBroadcastNow;
use Illuminate\Foundation\Events\Dispatchable;
use Illuminate\Queue\SerializesModels;

class ProductReceived implements ShouldBroadcastNow
{
    use Dispatchable, InteractsWithSockets, SerializesModels;

    public $product;

    public function __construct(Product $product)
    {
        $this->product = $product;
    }

    public function broadcastOn()
    {
        return new Channel('crawler');
    }
}

Next, broadcast the event from CrawlUrlCommand.php, where each product is saved. Add this import at the top of the file:

use App\Events\ProductReceived;

Then, after these lines:

$product->save();
$this->info("Item retrieved and saved");

add this:

ProductReceived::dispatch($product);

To listen for the event, update the scraper form component:

ScraperForm.php

<?php

namespace App\Http\Livewire;

use Illuminate\Support\Facades\Artisan;
use Livewire\Component;

class ScraperForm extends Component
{
    public $url;

    public $products = [];

    public $startScrape = false;

    protected $rules = [
        'url' => 'required|url'
    ];

    // Call onProductFetch() whenever a ProductReceived event arrives on the "crawler" channel
    protected $listeners = [
        'echo:crawler,ProductReceived' => 'onProductFetch'
    ];

    public function scrape()
    {
        $this->validate();

        try {
            Artisan::queue("Url:Crawl", ["url" => $this->url])
                ->onConnection('database')->onQueue('processing');

            $this->url = "";
            $this->startScrape = true;

            session()->flash("success", "Url added to the queue successfully; it will be processed shortly!");
        } catch (\Exception $ex) {
            // Show the error under the url field instead of dumping it
            $this->addError('url', $ex->getMessage());
        }
    }

    public function updated($propertyName)
    {
        $this->validateOnly($propertyName);
    }

    // Prepend each broadcast product so the newest appears first
    public function onProductFetch($data)
    {
        array_unshift($this->products, $data['product']);
    }

    public function render()
    {
        return view('livewire.scraper-form');
    }
}

Then update resources/views/livewire/scraper-form.blade.php:

<div>
    <h2 class="text-3xl font-bold">Enter url to scrape</h2>

    <form method="post" class="mt-4" wire:submit.prevent="scrape">
        @if(session()->has("success"))
            <div class="bg-green-600 text-white rounded p-4 flex justify-start items-center">
                <div>
                    <svg class="w-10" xmlns="http://www.w3.org/2000/svg" fill="none" viewBox="0 0 24 24" stroke="currentColor">
                        <path stroke-linecap="round" stroke-linejoin="round" stroke-width="2" d="M9 12l2 2 4-4m6 2a9 9 0 11-18 0 9 9 0 0118 0z" />
                    </svg>
                </div>
                <span>
                    {{ session("success") }}
                </span>
            </div>
        @endif

        <div class="flex flex-col mb-4">
            <label class="mb-2 uppercase font-bold text-lg text-grey-darkest">Url</label>
            <input type="text" wire:model.debounce.500ms="url" class="border border-gray-400 py-2 px-3 text-grey-darkest placeholder-gray-500" name="url" id="url" placeholder="Url..." />
            @error('url') <span class="text-red-700">{{ $message }}</span> @enderror
            <button type="submit" wire:loading.attr="disabled" class="block bg-red-400 hover:bg-teal-dark text-lg mx-auto p-4 rounded mt-3">Add to queue</button>
        </div>
    </form>

    @if($startScrape)
        <p>Products will be loaded shortly! Don't close the page...</p>
    @endif

    <div>
        @if(count($products) > 0)
            <div class="flex items-center justify-start">Found <span class="rounded-full h-10 w-10 flex items-center justify-center bg-blue-500">{{ count($products) }}</span> Products</div>
        @endif

        @foreach($products as $product)
            {{-- Same card markup as products.blade.php; $product is the array sent with the event --}}
            <div wire:key="product-{{ $product['id'] }}" class="max-w-md mx-auto bg-white rounded-xl shadow-md overflow-hidden md:max-w-2xl">
                <div class="md:flex">
                    @if(!empty($product['image_url']))
                        <div class="md:flex-shrink-0">
                            <img class="h-48 w-full object-cover md:w-48" src="{{ $product['image_url'] }}" />
                        </div>
                    @endif
                    <div class="p-8">
                        <div class="uppercase tracking-wide text-sm text-indigo-500 font-semibold">{{ $product['price'] }}</div>
                        <a href="{{ $product['product_url'] }}" target="_blank" class="block mt-1 text-lg leading-tight font-medium text-black hover:underline">{{ $product['title'] }}</a>
                    </div>
                </div>
            </div>
        @endforeach
    </div>
</div>

@push('scripts')
    <script>
        // Optional: inspect each broadcast product in the browser console while debugging
        window.Echo.channel('crawler')
            .listen('ProductReceived', (e) => {
                //console.log(e.product);
            });
    </script>
@endpush

Now give it a try: enter the URL “https://shopee.co.id/shop/127192295/search” and click Add to queue. You will see the message “Products will be loaded shortly!”, and after a few seconds the products will appear one by one.

Problems and Considerations

- The example in this tutorial only works with the website mentioned above. If you need to extend it to work with any website, you can check my previous article in this link.

- You may get Panther errors such as “the port is already in use”. Every run of the command starts chromedriver on the same port (9558), so try not to run two commands at the same time, and wait until each command finishes.

- Sometimes the CSS selectors you pass to the crawler's filtering methods return nothing, for example after the site changes its markup. In that case, you can try XPath selectors instead with filterXPath().
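If you do need several crawls at once (for example more than one queue worker), one option, which is an assumption of mine rather than part of the setup above, is to give each run its own port. The sketch below uses a hypothetical findFreePort() helper in plain PHP: it binds to port 0 so the operating system picks a free port, reads the assigned number, and releases it. Another process could still grab that port before chromedriver binds to it, so this reduces collisions rather than ruling them out.

```php
<?php
/**
 * Hypothetical helper: ask the OS for a currently free TCP port on 127.0.0.1
 * by binding to port 0, reading the assigned port, then closing the socket.
 */
function findFreePort(): int
{
    $server = stream_socket_server('tcp://127.0.0.1:0', $errno, $errstr);
    if ($server === false) {
        throw new RuntimeException("Could not open a socket: $errstr ($errno)");
    }
    $name = stream_socket_get_name($server, false); // e.g. "127.0.0.1:54321"
    fclose($server); // release the port so chromedriver can bind to it
    return (int) substr($name, strrpos($name, ':') + 1);
}

$port = findFreePort();
echo "Free port: {$port}\n";

// In CrawlUrlCommand the fixed port could then be replaced, e.g.:
// Client::createChromeClient(base_path("drivers/chromedriver"), null, ["port" => $port]);
```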
